Application Level Tracing library

Overview

ESP-IDF provides a useful feature for program behavior analysis: application level tracing. It is implemented in the corresponding library and can be enabled in menuconfig. This feature allows transferring arbitrary data between the host and ESP32 via JTAG, UART, or USB interfaces with a small overhead on program execution. It is possible to use the JTAG and UART interfaces simultaneously. The UART interface is mostly used for connection with the SEGGER SystemView tool (see SystemView).

Developers can use this library to send application-specific state of execution to the host and receive commands or other types of information in the opposite direction at runtime. The main use cases of this library are:

Collecting application-specific data. See Application Specific Tracing.

Lightweight logging to the host. See Logging to Host.

System behavior analysis. See System Behavior Analysis with SEGGER SystemView.

Source code coverage. See Gcov (Source Code Coverage).

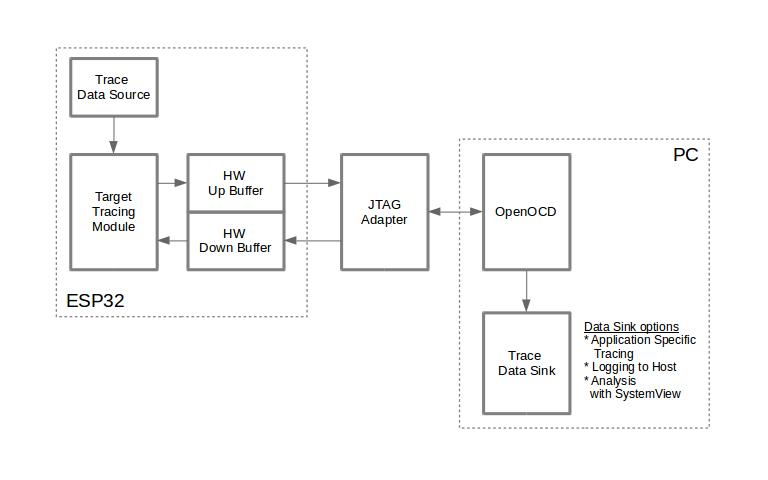

Tracing components used when working over JTAG interface are shown in the figure below.

Tracing Components Used When Working Over JTAG

Modes of Operation

The library supports two modes of operation:

Post-mortem mode: This is the default mode. It does not need interaction with the host side. In this mode, the tracing module does not check whether the host has read all the data from HW UP BUFFER, but directly overwrites old data with new data. This mode is useful when only the latest trace data is of interest to the user, e.g., for analyzing the program’s behavior just before a crash. The host can read the data later upon user request, e.g., via a special OpenOCD command when working over the JTAG interface.

Streaming mode: The tracing module enters this mode when the host connects to ESP32. In this mode, before writing new data to HW UP BUFFER, the tracing module checks whether there is enough space in it and, if necessary, waits for the host to read data and free enough memory. The maximum waiting time is controlled via timeout values passed by users to the corresponding API routines. So when the application writes data to the trace buffer with a finite maximum waiting time, that data may be dropped. This is especially true for tracing from time-critical code (ISRs, OS scheduler code, etc.), where infinite timeouts can lead to system malfunction. To avoid the loss of such critical data, developers can enable additional data buffering via the menuconfig option CONFIG_APPTRACE_PENDING_DATA_SIZE_MAX. This option specifies the size of data that can be buffered under the above conditions. It can also help when data transfer to the host is temporarily slowed down, e.g., due to USB bus congestion. However, it will not help when the average bitrate of the trace data stream exceeds the hardware interface capabilities.

Configuration Options and Dependencies

Using this feature depends on two components:

Host side: Application tracing over JTAG requires OpenOCD to be set up and running on the host machine. For setup instructions, see JTAG Debugging.

Target side: Application tracing functionality can be enabled in menuconfig. Go to the Component config > Application Level Tracing menu, which allows selecting the destination for the trace data (hardware interface for transport: JTAG and/or UART). Choosing any of the destinations automatically enables the CONFIG_APPTRACE_ENABLE option. For UART interfaces, users have to define the baud rate, TX and RX pin numbers, and additional UART-related parameters.

Note

To achieve higher data rates and minimize the number of dropped packets, it is recommended to set the JTAG clock frequency to the maximum value that still provides stable JTAG operation. See Optimize JTAG speed.

There are several additional menuconfig options not mentioned above:

Threshold for flushing last trace data to host on panic (CONFIG_APPTRACE_POSTMORTEM_FLUSH_THRESH). This option is necessary due to the nature of working over JTAG. When working over JTAG, trace data is exposed to the host in 16 KB blocks. In post-mortem mode, when one block is filled, it is exposed to the host and the previous one becomes unavailable. In other words, the trace data is overwritten with 16 KB granularity. On panic, the latest data from the current input block is exposed to the host, and the host can read it for post-analysis. A panic may occur when only a very small amount of data has not yet been exposed to the host. In this case, the previous 16 KB of collected data will be lost, and the host will see only the latest, very small piece of the trace, which can be insufficient to diagnose the problem. This menuconfig option helps avoid such situations by controlling the threshold for flushing data in case of a panic. For example, users can decide that they need at least 512 bytes of recent trace data, so if there are fewer than 512 bytes of pending data at the moment of the panic, they will not be flushed and will not overwrite the previous 16 KB. The option is only meaningful in post-mortem mode and when working over JTAG.

Timeout for flushing last trace data to host on panic (CONFIG_APPTRACE_ONPANIC_HOST_FLUSH_TMO). The option is only meaningful in streaming mode and it controls the maximum time that the tracing module will wait for the host to read the last data in case of panic.

UART RX/TX ring buffer size (CONFIG_APPTRACE_UART_TX_BUFF_SIZE). The size of the buffer depends on the amount of data transferred through the UART.

UART TX message size (CONFIG_APPTRACE_UART_TX_MSG_SIZE). The maximum size of a single message to transfer.

How to Use This Library

This library provides APIs for transferring arbitrary data between the host and ESP32. When enabled in menuconfig, the target application tracing module is initialized automatically at system startup, so all the user needs to do is call the corresponding APIs to send, receive, or flush the data.

Application Specific Tracing

In general, users should decide what type of data should be transferred in every direction and how these data must be interpreted (processed). The following steps must be performed to transfer data between the target and the host:

On the target side, users should implement algorithms for writing trace data to the host. The code below shows an example of how to do this.

```c
#include <string.h>
#include "esp_app_trace.h"
#include "esp_log.h"
...
char buf[] = "Hello World!";
esp_err_t res = esp_apptrace_write(ESP_APPTRACE_DEST_TRAX, buf, strlen(buf), ESP_APPTRACE_TMO_INFINITE);
if (res != ESP_OK) {
    ESP_LOGE(TAG, "Failed to write data to host!");
    return res;
}
```

The esp_apptrace_write() function uses memcpy to copy user data to the internal buffer. In some cases, it can be more optimal to use the esp_apptrace_buffer_get() and esp_apptrace_buffer_put() functions. They allow developers to allocate a buffer and fill it themselves. The following piece of code shows how to do this.

```c
#include <stdio.h>
#include "esp_app_trace.h"
#include "esp_log.h"
...
int number = 10;
char *ptr = (char *)esp_apptrace_buffer_get(ESP_APPTRACE_DEST_TRAX, 32, 100/*tmo in us*/);
if (ptr == NULL) {
    ESP_LOGE(TAG, "Failed to get buffer!");
    return ESP_FAIL;
}
/* snprintf keeps the output within the 32 bytes requested above */
snprintf(ptr, 32, "Here is the number %d", number);
esp_err_t res = esp_apptrace_buffer_put(ESP_APPTRACE_DEST_TRAX, ptr, 100/*tmo in us*/);
if (res != ESP_OK) {
    /* in case of error host tracing tool (e.g., OpenOCD) will report incomplete user buffer */
    ESP_LOGE(TAG, "Failed to put buffer!");
    return res;
}
```

Depending on their needs, users may also want to receive data from the host. The code below shows an example of how to do this.

```c
#include "esp_app_trace.h"
#include "esp_log.h"
...
char buf[32];
char down_buf[32];
size_t sz = sizeof(buf);

/* config down buffer */
esp_apptrace_down_buffer_config(down_buf, sizeof(down_buf));
/* check for incoming data and read them if any */
esp_err_t res = esp_apptrace_read(ESP_APPTRACE_DEST_TRAX, buf, &sz, 0/*do not wait*/);
if (res != ESP_OK) {
    ESP_LOGE(TAG, "Failed to read data from host!");
    return res;
}
if (sz > 0) {
    /* we have data, process them */
    ...
}
```

The esp_apptrace_read() function uses memcpy to copy host data to the user buffer. In some cases, it can be more optimal to use the esp_apptrace_down_buffer_get() and esp_apptrace_down_buffer_put() functions. They allow developers to occupy a chunk of the read buffer and process it in place. The following piece of code shows how to do this.

```c
#include <inttypes.h>
#include <stdio.h>
#include <string.h>
#include "esp_app_trace.h"
#include "esp_log.h"
...
char down_buf[32];
uint32_t number;
size_t sz = 32;

/* config down buffer */
esp_apptrace_down_buffer_config(down_buf, sizeof(down_buf));
char *ptr = (char *)esp_apptrace_down_buffer_get(ESP_APPTRACE_DEST_TRAX, &sz, 100/*tmo in us*/);
if (ptr == NULL) {
    ESP_LOGE(TAG, "Failed to get buffer!");
    return ESP_FAIL;
}
if (sz >= sizeof(number)) {
    /* copy instead of casting the pointer: ptr is not guaranteed to be 4-byte aligned */
    memcpy(&number, ptr, sizeof(number));
    printf("Here is the number %" PRIu32 "\n", number);
} else {
    printf("No data\n");
}
esp_err_t res = esp_apptrace_down_buffer_put(ESP_APPTRACE_DEST_TRAX, ptr, 100/*tmo in us*/);
if (res != ESP_OK) {
    /* in case of error host tracing tool (e.g., OpenOCD) will report incomplete user buffer */
    ESP_LOGE(TAG, "Failed to put buffer!");
    return res;
}
```

The next step is to build the program image and download it to the target as described in the Getting Started Guide.

Run OpenOCD (see JTAG Debugging).

Connect to the OpenOCD telnet server. This can be done using the following command in a terminal: telnet <oocd_host> 4444. If the telnet session is opened on the same machine that runs OpenOCD, you can use localhost as <oocd_host> in the command above.

Start trace data collection using a special OpenOCD command. This command will transfer tracing data and redirect them to the specified file or socket (currently only files are supported as trace data destination). For a description of the corresponding commands, see OpenOCD Application Level Tracing Commands.

The final step is to process the received data. Since the format of the data is defined by users, the processing stage is out of the scope of this document. Good starting points for a data processor are the Python scripts in $IDF_PATH/tools/esp_app_trace: apptrace_proc.py (used for feature tests) and logtrace_proc.py (see more details in section Logging to Host).

OpenOCD Application Level Tracing Commands

HW UP BUFFER is shared between user data blocks, and the allocated memory is filled on behalf of the API caller (in task or ISR context). In a multithreading environment, the task/ISR that fills the buffer can be preempted by another higher-priority task/ISR, so the user data preparation may not be complete at the moment when that chunk is read by the host. To handle such conditions, the tracing module prepends each user data chunk with a header that contains the allocated user buffer size (2 bytes) and the length of the actually written data (2 bytes), so the total length of the header is 4 bytes. The OpenOCD command that reads trace data reports an error when it reads an incomplete user data chunk, but in any case it puts the contents of the whole user chunk (including the unfilled area) into the output file.

Below is the description of available OpenOCD application tracing commands.

Note

Currently, OpenOCD does not provide commands to send arbitrary user data to the target.

Command usage:

esp apptrace [start <options>] | [stop] | [status] | [dump <cores_num> <outfile>]

Sub-commands:

start: Start tracing (continuous streaming).

stop: Stop tracing.

status: Get tracing status.

dump: Dump all trace data from the target (post-mortem dump).

Start command syntax:

start <outfile> [poll_period [trace_size [stop_tmo [wait4halt [skip_size]]]]]

outfile: Path to file to save data from both CPUs. This argument should have the following format: file://path/to/file.

poll_period: Data polling period (in ms) for available trace data. If greater than 0, the command runs in non-blocking mode. By default, 1 ms.

trace_size: Maximum size of data to collect (in bytes). Tracing is stopped after the specified amount of data is received. By default, -1 (trace size stop trigger is disabled).

stop_tmo: Idle timeout (in sec). Tracing is stopped if there is no data for the specified period of time. By default, -1 (this stop trigger is disabled). Optionally, set it to a value longer than the longest pause between tracing commands from the target.

wait4halt: If 0, start tracing immediately; otherwise, the command waits for the target to be halted (after reset, by breakpoint, etc.) and then automatically resumes it and starts tracing. By default, 0.

skip_size: Number of bytes to skip at the start. By default, 0.

Note

If poll_period is 0, the OpenOCD telnet command line will not be available until tracing is stopped. You must stop it manually by resetting the board or pressing Ctrl+C in the OpenOCD window (not the one with the telnet session). Another option is to set trace_size and wait until this size of data is collected. At this point, tracing stops automatically.

Command usage examples:

Collect 2048 bytes of tracing data to the file trace.log. The file will be saved in the openocd-esp32 directory.

esp apptrace start file://trace.log 1 2048 5 0 0

The tracing data will be retrieved and saved in non-blocking mode. This process will stop automatically after 2048 bytes are collected, or if no data are available for more than 5 seconds.

Note

Tracing data is buffered before it is made available to OpenOCD. If you see the “Data timeout!” message, it is likely that the target is not sending enough data to empty the buffer to OpenOCD before the timeout. Either increase the timeout or use the function esp_apptrace_flush() to flush the data at specific intervals.

Retrieve tracing data indefinitely in non-blocking mode.

esp apptrace start file://trace.log 1 -1 -1 0 0

There is no limitation on the size of collected data, and no data timeout is set. This process may be stopped by issuing the esp apptrace stop command at the OpenOCD telnet prompt, or by pressing Ctrl+C in the OpenOCD window.

Retrieve tracing data and save them indefinitely.

esp apptrace start file://trace.log 0 -1 -1 0 0

OpenOCD telnet command line prompt will not be available until tracing is stopped. To stop tracing, press Ctrl+C in the OpenOCD window.

Wait for the target to be halted. Then resume the target’s operation and start data retrieval. Stop after collecting 2048 bytes of data:

esp apptrace start file://trace.log 0 2048 -1 1 0

To configure tracing immediately after reset, use the OpenOCD reset halt command.

Logging to Host

ESP-IDF implements a useful feature: logging to the host via the application level tracing library. This is a kind of semihosting where all ESP_LOGx calls send strings to be printed to the host instead of UART. This can be useful because “printing to host” eliminates some steps performed when logging to UART: most of the work is done on the host.

By default, ESP-IDF’s logging library uses a vprintf-like function to write formatted output to a dedicated UART. In general, this involves the following steps:

The format string is parsed to obtain the type of each argument.

Every argument is converted to its string representation according to its type.

The format string combined with the converted arguments is sent to UART.

Though the implementation of the vprintf-like function can be optimized to a certain level, all steps above have to be performed in any case, and every step takes some time (especially item 3). As a result, adding logs to a program to identify a problem often changes the program’s behavior so that the problem can no longer be reproduced. In the worst cases, the program cannot work normally at all and ends up with an error or even hangs.

Possible ways to overcome this problem are to use higher UART bitrates (or another, faster interface) and/or to move the string formatting procedure to the host.

The application level tracing feature can be used to transfer log information to the host using the esp_apptrace_vprintf function. This function does not perform full parsing of the format string and arguments. Instead, it just calculates the number of arguments passed and sends them along with the format string address to the host. On the host, log data is processed and printed out by a special Python script.

Limitations

The current implementation of logging over JTAG has some limitations:

No support for tracing from ESP_EARLY_LOGx macros.

No support for printf arguments whose size exceeds 4 bytes (e.g., double and uint64_t).

Only strings from the .rodata section are supported as format strings and arguments.

The maximum number of printf arguments is 256.

How To Use It

To use logging via the trace module, users need to perform the following steps:

On the target side, the special vprintf-like function esp_apptrace_vprintf needs to be installed. It sends log data to the host. Example code is provided in system/app_trace_to_host.

Follow the instructions in items 2-5 in Application Specific Tracing.

To print out collected log records, run the following command in a terminal:

$IDF_PATH/tools/esp_app_trace/logtrace_proc.py /path/to/trace/file /path/to/program/elf/file.

Log Trace Processor Command Options

Command usage:

logtrace_proc.py [-h] [--no-errors] <trace_file> <elf_file>

Positional arguments:

trace_file: Path to log trace file.

elf_file: Path to program ELF file.

Optional arguments:

-h, --help: Show this help message and exit.

--no-errors, -n: Do not print errors.

System Behavior Analysis with SEGGER SystemView

Another useful ESP-IDF feature built on top of the application tracing library is system level tracing, which produces traces compatible with the SEGGER SystemView tool (see SystemView). SEGGER SystemView is a real-time recording and visualization tool that allows analyzing the runtime behavior of an application. It is possible to view events in real time through the UART interface.

How To Use It

Support for this feature is enabled by Component config > Application Level Tracing > FreeRTOS SystemView Tracing (CONFIG_APPTRACE_SV_ENABLE) menuconfig option. There are several other options enabled under the same menu:

SystemView destination. Select the destination interface: JTAG or UART. In case of UART, it will be possible to connect the SystemView application to the ESP32 directly and receive data in real time.

ESP32 timer to use as SystemView timestamp source (CONFIG_APPTRACE_SV_TS_SOURCE). Selects the source of timestamps for SystemView events. In single-core mode, timestamps are generated using the ESP32 internal cycle counter running at up to 240 MHz (~4 ns granularity). In dual-core mode, an external timer running at 40 MHz is used, so the timestamp granularity is 25 ns.

Individually enabled or disabled collection of SystemView events (CONFIG_APPTRACE_SV_EVT_XXX):

Trace Buffer Overflow Event

ISR Enter Event

ISR Exit Event

ISR Exit to Scheduler Event

Task Start Execution Event

Task Stop Execution Event

Task Start Ready State Event

Task Stop Ready State Event

Task Create Event

Task Terminate Event

System Idle Event

Timer Enter Event

Timer Exit Event

ESP-IDF has all the code required to produce SystemView compatible traces, so users can just configure necessary project options (see above), build, download the image to target, and use OpenOCD to collect data as described in the previous sections.

Select Pro or App CPU in the menuconfig options Component config > Application Level Tracing > FreeRTOS SystemView Tracing to trace over the UART interface in real time.

OpenOCD SystemView Tracing Command Options

Command usage:

esp sysview [start <options>] | [stop] | [status]

Sub-commands:

start: Start tracing (continuous streaming).

stop: Stop tracing.

status: Get tracing status.

Start command syntax:

start <outfile1> [outfile2] [poll_period [trace_size [stop_tmo]]]

outfile1: Path to file to save data from PRO CPU. This argument should have the following format: file://path/to/file.

outfile2: Path to file to save data from APP CPU. This argument should have the following format: file://path/to/file.

poll_period: Data polling period (in ms) for available trace data. If greater than 0, the command runs in non-blocking mode. By default, 1 ms.

trace_size: Maximum size of data to collect (in bytes). Tracing is stopped after the specified amount of data is received. By default, -1 (trace size stop trigger is disabled).

stop_tmo: Idle timeout (in sec). Tracing is stopped if there is no data for the specified period of time. By default, -1 (this stop trigger is disabled).

Note

If poll_period is 0, OpenOCD telnet command line will not be available until tracing is stopped. You must stop it manually by resetting the board or pressing Ctrl+C in the OpenOCD window (not the one with the telnet session). Another option is to set trace_size and wait until this size of data is collected. At this point, tracing stops automatically.

Command usage examples:

Collect SystemView tracing data to files pro-cpu.SVDat and app-cpu.SVDat. The files will be saved in the openocd-esp32 directory.

esp sysview start file://pro-cpu.SVDat file://app-cpu.SVDat

The tracing data will be retrieved and saved in non-blocking mode. To stop this process, enter the esp sysview stop command at the OpenOCD telnet prompt, optionally pressing Ctrl+C in the OpenOCD window.

Retrieve tracing data and save them indefinitely.

esp sysview start file://pro-cpu.SVDat file://app-cpu.SVDat 0 -1 -1

OpenOCD telnet command line prompt will not be available until tracing is stopped. To stop tracing, press Ctrl+C in the OpenOCD window.

Data Visualization

After trace data are collected, users can use a special tool to visualize the results and inspect behavior of the program.

Unfortunately, SystemView does not support tracing from multiple cores. So when tracing from ESP32 over the JTAG interface in dual-core mode, two files are generated: one for PRO CPU and another for APP CPU. Users can load each file into a separate instance of the tool. For tracing over UART, users can choose which CPU is traced by selecting Pro or App in the menuconfig option Component config > Application Level Tracing > FreeRTOS SystemView Tracing.

Analyzing data for every core in a separate instance of the tool is inconvenient. Fortunately, there is an Eclipse plugin called Impulse which can load several trace files, making it possible to inspect events from both cores in one view. Also, unlike the free version of SystemView, this plugin has no limit of 1,000,000 events.

Good instructions on how to install, configure, and visualize data in Impulse from one core can be found here.

Note

ESP-IDF uses its own mapping for SystemView FreeRTOS event IDs, so users need to replace the original mapping file $SYSVIEW_INSTALL_DIR/Description/SYSVIEW_FreeRTOS.txt with $IDF_PATH/docs/api-guides/SYSVIEW_FreeRTOS.txt. Also, the contents of that IDF-specific file should be used when configuring the SystemView serializer using the above link.

Configure Impulse for Dual Core Traces

After installing Impulse and ensuring that it can successfully load trace files for each core in separate tabs, users can add a special Multi Adapter port and load both files into one view. To do this, perform the following steps in Eclipse:

Open the Signal Ports view: go to Window > Show View > Other, find the Signal Ports view in the Impulse folder, and double-click it.

In the Signal Ports view, right-click Ports and select Add > New Multi Adapter Port.

In the open dialog box, click Add and select New Pipe/File.

In the open dialog box, select SystemView Serializer as Serializer and set the path to the PRO CPU trace file. Click OK.

Repeat the previous two steps for the APP CPU trace file.

Double-click the created port. The view for this port should open.

Click the Start/Stop Streaming button. Data should be loaded.

Use the Zoom Out, Zoom In and Zoom Fit buttons to inspect data.

For setting measurement cursors and other features, please see the Impulse documentation.

Note

If you have problems with visualization (no data is shown or the zoom action behaves strangely), try deleting the current signal hierarchy and double-clicking the necessary file or port. Eclipse will ask you to create a new signal hierarchy.

Gcov (Source Code Coverage)

Basics of Gcov and Gcovr

Source code coverage is data indicating the count and frequency of every program execution path that has been taken within a program’s runtime. Gcov is a GCC tool that, when used in concert with the compiler, can generate log files indicating the execution count of each line of a source file. The Gcovr tool is a utility for managing Gcov and generating summarized code coverage results.

Generally, using Gcov to compile and run programs on the host involves these steps:

Compile the source code using GCC with the --coverage option enabled. This will cause the compiler to generate .gcno notes files during compilation. The notes files contain information to reconstruct execution path block graphs and map each block to source code line numbers. Each source file compiled with the --coverage option should have its own .gcno file of the same name (e.g., a main.c will generate a main.gcno when compiled).

Execute the program. During execution, the program should generate .gcda data files. These data files contain the counts of the number of times an execution path was taken. The program will generate a .gcda file for each source file compiled with the --coverage option (e.g., main.c will generate a main.gcda).

Gcov or Gcovr can be used to generate a code coverage report based on the .gcno, .gcda, and source files. Gcov will generate a text-based coverage report for each source file in the form of a .gcov file, whilst Gcovr will generate a coverage report in HTML format.

Gcov and Gcovr in ESP-IDF

Using Gcov in ESP-IDF is complicated by the fact that the program runs remotely from the host (i.e., on the target). The code coverage data (i.e., the .gcda files) is initially stored on the target itself. OpenOCD is then used to dump the code coverage data from the target to the host via JTAG during runtime. Using Gcov in ESP-IDF can be split into the following steps.

Setting Up a Project for Gcov

Compiler Option

In order to obtain code coverage data in a project, one or more source files within the project must be compiled with the --coverage option. In ESP-IDF, this can be achieved at the component level or the individual source file level:

To cause all source files in a component to be compiled with the --coverage option, you can add target_compile_options(${COMPONENT_LIB} PRIVATE --coverage) to the CMakeLists.txt file of the component.

To cause a select number of source files (e.g., source1.c and source2.c) in the same component to be compiled with the --coverage option, you can add set_source_files_properties(source1.c source2.c PROPERTIES COMPILE_FLAGS --coverage) to the CMakeLists.txt file of the component.

When a source file is compiled with the --coverage option (e.g., gcov_example.c), the compiler will generate the gcov_example.gcno file in the project’s build directory.

Project Configuration

Before building a project with source code coverage, make sure that the following project configuration options are enabled by running idf.py menuconfig.

Enable the application tracing module by selecting Trace Memory for the CONFIG_APPTRACE_DESTINATION1 option.

Enable Gcov to the host via the CONFIG_APPTRACE_GCOV_ENABLE option.

Dumping Code Coverage Data

Once a project has been compiled with the --coverage option and flashed onto the target, code coverage data will be stored internally on the target (i.e., in trace memory) whilst the application runs. The process of transferring code coverage data from the target to the host is known as dumping.

The dumping of coverage data is done via OpenOCD (see JTAG Debugging on how to set up and run OpenOCD). A dump is triggered by issuing commands to OpenOCD; therefore, a telnet session to OpenOCD must be opened to issue such commands (run telnet localhost 4444). Note that GDB could be used instead of telnet to issue commands to OpenOCD; however, all commands issued from GDB will need to be prefixed as mon <oocd_command>.

When the target dumps code coverage data, the .gcda files are stored in the project’s build directory. For example, if gcov_example_main.c of the main component is compiled with the --coverage option, then dumping the code coverage data would generate gcov_example_main.c.gcda in build/esp-idf/main/CMakeFiles/__idf_main.dir/. Note that the .gcno files produced during compilation are also placed in the same directory.

The dumping of code coverage data can be done multiple times throughout an application’s lifetime. Each dump will simply update the .gcda file with the newest code coverage information. Code coverage data is cumulative, thus the newest data will contain the total execution count of each code path over the application’s entire lifetime.

ESP-IDF supports two methods of dumping code coverage data from the target to the host:

Instant Run-Time Dump

Hard-coded Dump

Instant Run-Time Dump

An Instant Run-Time Dump is triggered by calling the ESP32 gcov OpenOCD command (via a telnet session). Once called, OpenOCD will immediately preempt the ESP32’s current state and execute a built-in ESP-IDF Gcov debug stub function. The debug stub function will handle the dumping of data to the host. Upon completion, the ESP32 will resume its current state.

Hard-coded Dump

A Hard-coded Dump is triggered by the application itself by calling esp_gcov_dump() from somewhere within the application. When called, the application will halt and wait for OpenOCD to connect and retrieve the code coverage data. Once esp_gcov_dump() is called, the host must execute the esp gcov dump OpenOCD command (via a telnet session). The esp gcov dump command will cause OpenOCD to connect to the ESP32, retrieve the code coverage data, then disconnect from the ESP32, thus allowing the application to resume. Hard-coded Dumps can also be triggered multiple times throughout an application’s lifetime.

Hard-coded dumps are useful when code coverage data is required at certain points of an application’s lifetime: place esp_gcov_dump() where necessary (e.g., after application initialization, or during each iteration of an application’s main loop).

GDB can be used to set a breakpoint on esp_gcov_dump(), then call mon esp gcov dump automatically via a gdbinit script (see Using GDB from Command Line).

The following GDB script will add a breakpoint at esp_gcov_dump(), then call the mon esp gcov dump OpenOCD command.

```
b esp_gcov_dump
commands
mon esp gcov dump
end
```

Note

Note that all OpenOCD commands should be invoked in GDB as: mon <oocd_command>.

Generating Coverage Report

Once the code coverage data has been dumped, the .gcno, .gcda and the source files can be used to generate a code coverage report. A code coverage report is simply a report indicating the number of times each line in a source file has been executed.

Both Gcov and Gcovr can be used to generate code coverage reports. Gcov is provided along with the Xtensa toolchain, whilst Gcovr may need to be installed separately. For details on how to use Gcov or Gcovr, refer to Gcov documentation and Gcovr documentation.

Adding Gcovr Build Target to Project

To make report generation more convenient, users can define additional build targets in their projects such that the report generation can be done with a single build command.

Add the following lines to the CMakeLists.txt file of your project.

```cmake
include($ENV{IDF_PATH}/tools/cmake/gcov.cmake)
idf_create_coverage_report(${CMAKE_CURRENT_BINARY_DIR}/coverage_report)
idf_clean_coverage_report(${CMAKE_CURRENT_BINARY_DIR}/coverage_report)
```

The following commands can now be used:

cmake --build build/ --target gcovr-report will generate an HTML coverage report in the $(BUILD_DIR_BASE)/coverage_report/html directory.

cmake --build build/ --target cov-data-clean will remove all coverage data files.