ESP-IDF FreeRTOS SMP Changes¶

Overview¶

ESP-IDF FreeRTOS is a modified version of vanilla FreeRTOS that supports symmetric multiprocessing (SMP). ESP-IDF FreeRTOS is based on the Xtensa port of FreeRTOS v8.2.0. This guide outlines the major differences between vanilla FreeRTOS and ESP-IDF FreeRTOS. The API reference for vanilla FreeRTOS can be found at https://www.freertos.org/a00106.html

For information regarding features that are exclusive to ESP-IDF FreeRTOS, see ESP-IDF FreeRTOS Additions.

Backported Features: Although ESP-IDF FreeRTOS is based on the Xtensa port of FreeRTOS v8.2.0, a number of FreeRTOS v9.0.0 features have been backported to ESP-IDF.

Task Deletion: Task deletion behavior has been backported from FreeRTOS v9.0.0 and modified to be SMP compatible. Task memory is freed immediately when vTaskDelete() is called to delete a task that is not currently running and not pinned to the other core. Otherwise, freeing of task memory is still delegated to the Idle Task.

Thread Local Storage Pointers & Deletion Callbacks: ESP-IDF FreeRTOS has backported the Thread Local Storage Pointers (TLSP) feature and extended it with Deletion Callbacks. Deletion Callbacks are called automatically during task deletion and are used to free memory pointed to by TLSP. Call vTaskSetThreadLocalStoragePointerAndDelCallback() to set TLSP and Deletion Callbacks.

Configuring ESP-IDF FreeRTOS: Several aspects of ESP-IDF FreeRTOS can be set in the project configuration (idf.py menuconfig), such as running ESP-IDF in Unicore (single core) Mode or configuring the number of Thread Local Storage Pointers each task will have.

Backported Features¶

The following features have been backported from FreeRTOS v9.0.0 to ESP-IDF.

Static Allocation¶

This feature has been backported from FreeRTOS v9.0.0 to ESP-IDF. The CONFIG_FREERTOS_SUPPORT_STATIC_ALLOCATION option must be enabled in menuconfig in order for static allocation functions to be available. Once enabled, the following functions can be called…

Other Features¶

Backporting Notes¶

1) xTaskCreateStatic() has been made SMP compatible in a similar fashion to xTaskCreate() (see Tasks and Task Creation). Therefore xTaskCreateStaticPinnedToCore() can also be called.

2) Although vanilla FreeRTOS allows the Timer feature’s daemon task to be statically allocated, the daemon task is always dynamically allocated in ESP-IDF. Therefore vApplicationGetTimerTaskMemory does not need to be defined when using statically allocated timers in ESP-IDF FreeRTOS.

3) The Thread Local Storage Pointer feature has been modified in ESP-IDF FreeRTOS to include Deletion Callbacks (see Thread Local Storage Pointers & Deletion Callbacks). Therefore the function vTaskSetThreadLocalStoragePointerAndDelCallback() can also be called.

Tasks and Task Creation¶

Tasks in ESP-IDF FreeRTOS are designed to run on a particular core. Therefore, two new task creation functions have been added to ESP-IDF FreeRTOS by appending PinnedToCore to the names of the vanilla FreeRTOS task creation functions: xTaskCreate() and xTaskCreateStatic() have led to the addition of xTaskCreatePinnedToCore() and xTaskCreateStaticPinnedToCore() respectively (see Backported Features).

For more details see freertos/tasks.c

The ESP-IDF FreeRTOS task creation functions are nearly identical to their vanilla counterparts, except for an extra parameter named xCoreID. This parameter specifies the core on which the task should run and can be one of the following values.

0: pins the task to PRO_CPU

1: pins the task to APP_CPU

tskNO_AFFINITY: allows the task to run on both CPUs

For example, xTaskCreatePinnedToCore(tsk_callback, "APP_CPU Task", 1000, NULL, 10, NULL, 1) creates a task of priority 10 that is pinned to APP_CPU with a stack size of 1000 bytes. Note that the uxStackDepth parameter in vanilla FreeRTOS specifies a task’s stack depth as a number of words, whereas ESP-IDF FreeRTOS specifies the stack depth in bytes.
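When porting vanilla code, the stack depth therefore has to be scaled by the word size. The helper below is a hypothetical illustration (not an ESP-IDF API) of that arithmetic, assuming the vanilla port uses a 4-byte StackType_t, as is typical for 32-bit ports.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper, not part of ESP-IDF: convert a vanilla FreeRTOS
 * stack depth (counted in stack words) into the byte count that
 * xTaskCreatePinnedToCore() expects in ESP-IDF FreeRTOS. */
static uint32_t stack_words_to_bytes(uint32_t depth_in_words, size_t word_size)
{
    assert(word_size > 0);
    return depth_in_words * (uint32_t)word_size;
}
```

For example, a vanilla task created with a depth of 250 words on a port with 4-byte words has the same 1000-byte stack as the xTaskCreatePinnedToCore() call in the example above.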

Note that the vanilla FreeRTOS functions xTaskCreate() and xTaskCreateStatic() have been defined in ESP-IDF FreeRTOS as inline functions which call xTaskCreatePinnedToCore() and xTaskCreateStaticPinnedToCore() respectively, with tskNO_AFFINITY as the xCoreID value.

Each Task Control Block (TCB) in ESP-IDF stores the xCoreID as a member. Hence, when each core calls the scheduler to select a task to run, the xCoreID member allows the scheduler to determine whether a given task is permitted to run on the calling core.
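The per-TCB check can be sketched as a small predicate. This is a simplified host-side model with hypothetical names: MODEL_NO_AFFINITY stands in for tskNO_AFFINITY, and its value here is only assumed to differ from any valid core ID.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for tskNO_AFFINITY (the real value comes from ESP-IDF). */
#define MODEL_NO_AFFINITY INT32_MAX

/* Minimal model of the TCB field the scheduler inspects. */
typedef struct {
    const char *name;
    int32_t core_id;   /* 0 = PRO_CPU, 1 = APP_CPU, or MODEL_NO_AFFINITY */
} affinity_tcb_t;

/* True if the scheduler running on calling_core may select this task:
 * the task is either unpinned or pinned to that same core. */
static bool task_allowed_on_core(const affinity_tcb_t *tcb, int32_t calling_core)
{
    assert(calling_core == 0 || calling_core == 1);
    return tcb->core_id == MODEL_NO_AFFINITY || tcb->core_id == calling_core;
}
```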

Scheduling¶

Vanilla FreeRTOS implements scheduling in the vTaskSwitchContext() function. This function is responsible for selecting the highest priority task to run from a list of tasks in the Ready state known as the Ready Tasks List (described in the next section). In ESP-IDF FreeRTOS, each core calls vTaskSwitchContext() independently to select a task to run from the Ready Tasks List, which is shared between both cores. There are several differences in scheduling behavior between vanilla and ESP-IDF FreeRTOS, such as differences in Round Robin scheduling, scheduler suspension, and tick interrupt synchronicity.

Round Robin Scheduling¶

Given multiple tasks in the Ready state and of the same priority, vanilla FreeRTOS implements Round Robin scheduling between them. This results in running those tasks in turn each time the scheduler is called (e.g. every tick interrupt). On the other hand, the ESP-IDF FreeRTOS scheduler may skip tasks when Round Robin scheduling multiple Ready state tasks of the same priority.

The issue of skipping tasks during Round Robin scheduling arises from the way

the Ready Tasks List is implemented in FreeRTOS. In vanilla FreeRTOS,

pxReadyTasksList is used to store a list of tasks that are in the Ready

state. The list is implemented as an array of length configMAX_PRIORITIES

where each element of the array is a linked list. Each linked list is of type

List_t and contains TCBs of tasks of the same priority that are in the

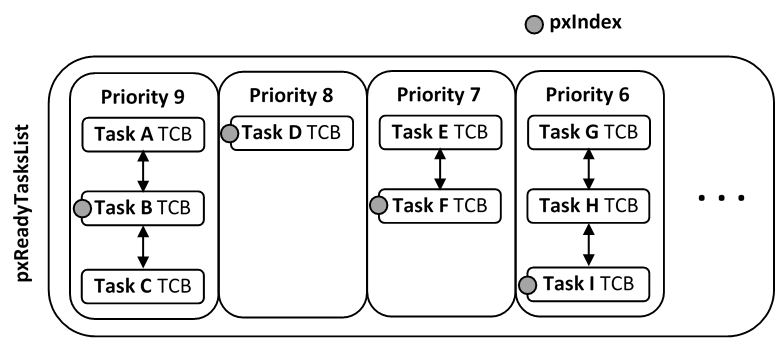

Ready state. The following diagram illustrates the pxReadyTasksList

structure.

Illustration of FreeRTOS Ready Task List Data Structure¶

Each linked list also contains a pxIndex which points to the last TCB returned when the list was queried. This index allows vTaskSwitchContext() to start traversing the list at the TCB immediately after pxIndex, hence implementing Round Robin Scheduling between tasks of the same priority.

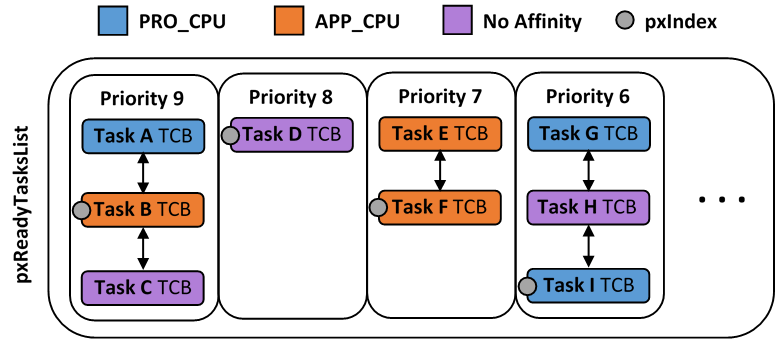

In ESP-IDF FreeRTOS, the Ready Tasks List is shared between cores hence

pxReadyTasksList will contain tasks pinned to different cores. When a core

calls the scheduler, it is able to look at the xCoreID member of each TCB

in the list to determine if a task is allowed to run on calling the core. The

ESP-IDF FreeRTOS pxReadyTasksList is illustrated below.

Illustration of FreeRTOS Ready Task List Data Structure in ESP-IDF¶

Therefore when PRO_CPU calls the scheduler, it will only consider the tasks in blue or purple. Whereas when APP_CPU calls the scheduler, it will only consider the tasks in orange or purple.

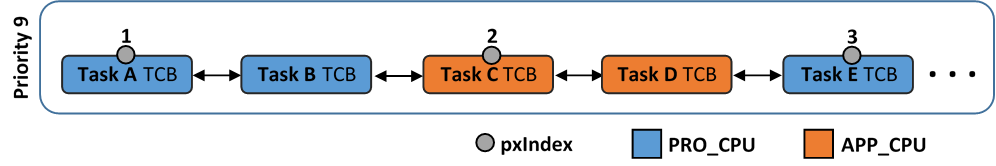

Although each TCB has an xCoreID in ESP-IDF FreeRTOS, the linked list of

each priority only has a single pxIndex. Therefore when the scheduler is

called from a particular core and traverses the linked list, it will skip all

TCBs pinned to the other core and point the pxIndex at the selected task. If

the other core then calls the scheduler, it will traverse the linked list

starting at the TCB immediately after pxIndex. Therefore, TCBs skipped on

the previous scheduler call from the other core would not be considered on the

current scheduler call. This issue is demonstrated in the following

illustration.

Illustration of pxIndex behavior in ESP-IDF FreeRTOS¶

Referring to the illustration above, assume that priority 9 is the highest priority, and that none of the tasks in priority 9 will block, hence they will always be either in the Running or Ready state.

1) PRO_CPU calls the scheduler and selects Task A to run, hence moves pxIndex to point to Task A.

2) APP_CPU calls the scheduler and starts traversing from the task after pxIndex, which is Task B. However, Task B is not selected to run as it is not pinned to APP_CPU, hence it is skipped and Task C is selected instead. pxIndex now points to Task C.

3) PRO_CPU calls the scheduler and starts traversing from Task D. It skips Task D, selects Task E to run, and points pxIndex to Task E. Notice that Task B isn’t traversed because it was skipped the last time APP_CPU called the scheduler to traverse the list.

4) The same situation will occur with Task D if APP_CPU calls the scheduler again, as pxIndex now points to Task E.
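The four steps above can be reproduced with a small host-side model of a single priority level. All names are hypothetical; the model keeps only the property that matters here (a circular list of TCBs sharing one index that stands in for pxIndex) and assumes Tasks A, B and E are pinned to PRO_CPU and Tasks C and D to APP_CPU, which is consistent with the walkthrough.

```c
#include <assert.h>

#define MODEL_PRO_CPU 0
#define MODEL_APP_CPU 1
#define MODEL_NUM_TASKS 5

/* Hypothetical model of one priority level of pxReadyTasksList. */
typedef struct {
    char name;     /* task name, 'A' to 'E' */
    int core_id;   /* core the task is pinned to */
} rr_tcb_t;

static const rr_tcb_t ready_list[MODEL_NUM_TASKS] = {
    {'A', MODEL_PRO_CPU}, {'B', MODEL_PRO_CPU}, {'C', MODEL_APP_CPU},
    {'D', MODEL_APP_CPU}, {'E', MODEL_PRO_CPU},
};

/* Single shared index; start on the last entry so the first walk begins at A. */
static int px_index = MODEL_NUM_TASKS - 1;

/* Called on `core`: walk from the TCB after px_index, skip TCBs pinned to
 * the other core, and leave px_index on the selected TCB.
 * Returns '?' if no task at this priority may run on `core`. */
static char select_task(int core)
{
    assert(core == MODEL_PRO_CPU || core == MODEL_APP_CPU);
    for (int step = 1; step <= MODEL_NUM_TASKS; step++) {
        int i = (px_index + step) % MODEL_NUM_TASKS;
        if (ready_list[i].core_id == core) {
            px_index = i;
            return ready_list[i].name;
        }
    }
    return '?';
}
```

Calling select_task() alternately for PRO_CPU and APP_CPU returns A, C, E and then C again: Task B is never reached by PRO_CPU in step 3, and Task D is never reached by APP_CPU in step 4.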

One solution to the issue of task skipping is to ensure that every task will enter a blocked state so that it is periodically removed from the Ready Tasks List. Another solution is to distribute tasks across multiple priorities such that a given priority will not be assigned multiple tasks that are pinned to different cores.

Scheduler Suspension¶

In vanilla FreeRTOS, suspending the scheduler via vTaskSuspendAll() prevents calls of vTaskSwitchContext() from context switching until the scheduler has been resumed with xTaskResumeAll(). However, ISRs are still serviced. Therefore, any changes in task states resulting from the currently running task or from ISRs will not be executed until the scheduler is resumed. Scheduler suspension in vanilla FreeRTOS is a common protection method against simultaneous access of data shared between tasks, whilst still allowing ISRs to be serviced.

In ESP-IDF FreeRTOS, vTaskSuspendAll() will only prevent calls of vTaskSwitchContext() from switching contexts on the core that called for the suspension. Hence, if PRO_CPU calls vTaskSuspendAll(), APP_CPU will still be able to switch contexts. If data is shared between tasks that are pinned to different cores, scheduler suspension is NOT a valid method of protection against simultaneous access. Consider using critical sections (disables interrupts) or semaphores (does not disable interrupts) instead when protecting shared resources in ESP-IDF FreeRTOS.

In general, it’s better to use other RTOS primitives like mutex semaphores to protect data shared between tasks, rather than vTaskSuspendAll().
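The per-core nature of scheduler suspension can be summarized in a small host-side model. This is an illustrative sketch with hypothetical names, assuming one nestable suspension counter per core; it does not reproduce the ESP-IDF implementation.

```c
#include <assert.h>
#include <stdbool.h>

#define MODEL_NUM_CORES 2

/* Hypothetical model: each core has its own suspension counter, so
 * suspending the scheduler on one core does not affect the other core. */
static unsigned suspended_count[MODEL_NUM_CORES];

static void model_suspend_all(int core)
{
    assert(core >= 0 && core < MODEL_NUM_CORES);
    suspended_count[core]++;            /* nested suspension is allowed */
}

static void model_resume_all(int core)
{
    assert(core >= 0 && core < MODEL_NUM_CORES && suspended_count[core] > 0);
    suspended_count[core]--;
}

/* A core may switch contexts only while its own counter is zero. */
static bool model_can_switch(int core)
{
    assert(core >= 0 && core < MODEL_NUM_CORES);
    return suspended_count[core] == 0;
}
```

In this model, suspending on core 0 blocks context switches on core 0 only, while core 1 keeps scheduling, which is why suspension cannot protect data shared between tasks pinned to different cores.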

Tick Interrupt Synchronicity¶

In ESP-IDF FreeRTOS, tasks on different cores that unblock on the same tick count might not run at exactly the same time due to the scheduler calls from each core being independent, and the tick interrupts to each core being unsynchronized.

In vanilla FreeRTOS, the tick interrupt triggers a call to xTaskIncrementTick(), which is responsible for incrementing the tick counter, checking if tasks which have called vTaskDelay() have fulfilled their delay period, and moving those tasks from the Delayed Task List to the Ready Task List. The tick interrupt will then call the scheduler if a context switch is necessary.

In ESP-IDF FreeRTOS, delayed tasks are unblocked with reference to the tick interrupt on PRO_CPU, as PRO_CPU is responsible for incrementing the shared tick count. However, tick interrupts to each core might not be synchronized (same frequency but out of phase), hence when PRO_CPU receives a tick interrupt, APP_CPU might not have received it yet. Therefore, if multiple tasks of the same priority are unblocked on the same tick count, the task pinned to PRO_CPU will run immediately, whereas the task pinned to APP_CPU must wait until APP_CPU receives its out-of-sync tick interrupt. Upon receiving the tick interrupt, APP_CPU will then call for a context switch and finally switch contexts to the newly unblocked task.
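The resulting delay can be estimated with simple arithmetic. The function below is a hypothetical timing model, assuming APP_CPU's tick n fires a fixed phase offset after PRO_CPU's tick n and ignoring interrupt latency and context-switch cost.

```c
#include <assert.h>
#include <stdint.h>

#define MODEL_PRO_CPU 0
#define MODEL_APP_CPU 1

/* Hypothetical timing model: PRO_CPU's tick n fires at n * period_us, and
 * APP_CPU's tick n fires app_phase_us later (0 <= app_phase_us < period_us).
 * Returns the earliest time at which a task pinned to `core` and unblocked
 * on tick `wake_tick` can be switched in on that core. */
static uint64_t model_switch_in_time_us(int core, uint64_t wake_tick,
                                        uint64_t period_us,
                                        uint64_t app_phase_us)
{
    assert(core == MODEL_PRO_CPU || core == MODEL_APP_CPU);
    assert(app_phase_us < period_us);
    /* PRO_CPU increments the shared tick count and unblocks the task. */
    uint64_t pro_tick_time = wake_tick * period_us;
    /* APP_CPU only switches contexts on its own, later tick interrupt. */
    return core == MODEL_PRO_CPU ? pro_tick_time : pro_tick_time + app_phase_us;
}
```

With an assumed 1 ms tick period and a 300 microsecond phase offset, two tasks unblocked on tick 5 are switched in at 5000 and 5300 microseconds respectively; these numbers are illustrative, not measurements.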

Therefore, task delays should NOT be used as a method of synchronization between tasks in ESP-IDF FreeRTOS. Instead, consider using a counting semaphore to unblock multiple tasks at the same time.

Critical Sections & Disabling Interrupts¶

Vanilla FreeRTOS implements critical sections in vTaskEnterCritical(), which disables the scheduler and calls portDISABLE_INTERRUPTS. This prevents context switches and servicing of ISRs during a critical section. Therefore, critical sections are used as a valid protection method against simultaneous access in vanilla FreeRTOS.

ESP-IDF contains some modifications to work with dual core concurrency, and the dual core API is used even on a single-core chip.

For this reason, ESP-IDF FreeRTOS implements critical sections using special mutexes, referred to as portMUX_TYPE objects, which are built on top of the spinlock component. Calls to enter or exit a critical section must provide a spinlock object that is associated with the shared resource requiring access protection. When entering a critical section in ESP-IDF FreeRTOS, the calling core disables its scheduler and interrupts, similar to the vanilla FreeRTOS implementation. However, the calling core also takes the lock, whilst the other core is left unaffected during the critical section. If the other core attempts to take the same spinlock, it will spin until the lock is released. Therefore, the ESP-IDF FreeRTOS implementation of critical sections allows a core to have protected access to a shared resource without disabling the other core. The other core will only be affected if it tries to concurrently access the same resource.
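To illustrate the spinning behavior described above, the following host-side model uses C11 atomics. It is a sketch under stated assumptions, not the ESP-IDF spinlock implementation: all names are hypothetical, and the disabling of interrupts and of the scheduler on the calling core, as well as nesting, are left out.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical, simplified spinlock standing in for a critical section mux. */
typedef struct {
    atomic_flag locked;
} model_spinlock_t;

#define MODEL_SPINLOCK_INIT { ATOMIC_FLAG_INIT }

/* Try once to take the lock; returns true on success. */
static bool model_spinlock_try_take(model_spinlock_t *lock)
{
    return !atomic_flag_test_and_set_explicit(&lock->locked, memory_order_acquire);
}

/* Busy-wait until the lock is taken, as the other core would while the
 * lock is held by the core inside the critical section. */
static void model_spinlock_take(model_spinlock_t *lock)
{
    while (!model_spinlock_try_take(lock)) {
        /* lock held elsewhere: keep spinning */
    }
}

/* Release the lock so a spinning core can proceed. */
static void model_spinlock_give(model_spinlock_t *lock)
{
    atomic_flag_clear_explicit(&lock->locked, memory_order_release);
}
```

Using one lock object per shared resource, as the text recommends, means two cores only contend when they touch the same resource.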

The ESP-IDF FreeRTOS critical section functions have been modified as follows…

taskENTER_CRITICAL(mux), taskENTER_CRITICAL_ISR(mux), portENTER_CRITICAL(mux), portENTER_CRITICAL_ISR(mux) are all macro defined to call vTaskEnterCritical()

taskEXIT_CRITICAL(mux), taskEXIT_CRITICAL_ISR(mux), portEXIT_CRITICAL(mux), portEXIT_CRITICAL_ISR(mux) are all macro defined to call vTaskExitCritical()

portENTER_CRITICAL_SAFE(mux), portEXIT_CRITICAL_SAFE(mux) macros identify the context of execution, i.e. ISR or Non-ISR, and call the appropriate critical section functions (port*_CRITICAL in Non-ISR and port*_CRITICAL_ISR in ISR) in order to be in compliance with Vanilla FreeRTOS.

For more details see soc/include/soc/spinlock.h and freertos/tasks.c

It should be noted that when modifying vanilla FreeRTOS code to be ESP-IDF FreeRTOS compatible, it is trivial to modify the type of critical section called as they are all defined to call the same function. As long as the same spinlock is provided upon entering and exiting, the type of call should not matter.

Task Deletion¶

FreeRTOS task deletion prior to v9.0.0 delegated the freeing of task memory entirely to the Idle Task. Currently, the freeing of task memory will occur immediately (within vTaskDelete()) if the task being deleted is not currently running and is not pinned to the other core (with respect to the core vTaskDelete() is called on). TLSP deletion callbacks will also run immediately if the same conditions are met.

However, calling vTaskDelete() to delete a task that is either currently running or pinned to the other core will still result in the freeing of memory being delegated to the Idle Task.
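The rule above reduces to a single boolean condition. The function below is a hypothetical model of that decision, not ESP-IDF code; MODEL_NO_AFFINITY stands in for tskNO_AFFINITY, and an unpinned task is assumed not to count as pinned to the other core.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for tskNO_AFFINITY. */
#define MODEL_NO_AFFINITY INT32_MAX

/* True if task memory would be freed inside vTaskDelete(); false if the
 * freeing is delegated to the Idle Task. */
static bool model_frees_immediately(bool target_is_running,
                                    int32_t target_core_id,
                                    int32_t calling_core)
{
    assert(calling_core == 0 || calling_core == 1);
    bool pinned_to_other_core = target_core_id != MODEL_NO_AFFINITY &&
                                target_core_id != calling_core;
    /* Both conditions must hold: not running AND not pinned to the other core. */
    return !target_is_running && !pinned_to_other_core;
}
```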

Thread Local Storage Pointers & Deletion Callbacks¶

Thread Local Storage Pointers (TLSP) are pointers stored directly in the TCB. TLSP allow each task to have its own unique set of pointers to data structures. However, task deletion in vanilla FreeRTOS does not automatically free the memory pointed to by TLSP. Therefore, if the memory pointed to by TLSP is not explicitly freed by the user before task deletion, a memory leak will occur.

ESP-IDF FreeRTOS provides the added feature of Deletion Callbacks. Deletion Callbacks are called automatically during task deletion to free memory pointed to by TLSP. Each TLSP can have its own Deletion Callback. Note that due to the Task Deletion behavior, there can be instances where Deletion Callbacks are called in the context of the Idle Tasks. Therefore, Deletion Callbacks should never attempt to block, and critical sections should be kept as short as possible to minimize priority inversion.

Deletion callbacks are of type void (*TlsDeleteCallbackFunction_t)( int, void * ), where the first parameter is the index number of the associated TLSP and the second parameter is the TLSP itself.

Deletion callbacks are set alongside TLSP by calling vTaskSetThreadLocalStoragePointerAndDelCallback(). Calling the vanilla FreeRTOS function vTaskSetThreadLocalStoragePointer() will simply set the TLSP’s associated Deletion Callback to NULL, meaning that no callback will be called for that TLSP during task deletion. If a deletion callback is NULL, users should manually free the memory pointed to by the associated TLSP before task deletion in order to avoid a memory leak.
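The bookkeeping described above can be sketched as a host-side model, assuming one callback slot per TLSP. The type model_tls_delete_cb_t has the same shape as TlsDeleteCallbackFunction_t; every other name, including the example callback free_tls_buffer(), is hypothetical, and the model does not reproduce where ESP-IDF actually runs the callbacks (inside vTaskDelete() or in the Idle Task).

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Stands in for CONFIG_FREERTOS_THREAD_LOCAL_STORAGE_POINTERS. */
#define MODEL_NUM_TLSP 2

/* Same shape as TlsDeleteCallbackFunction_t: (TLSP index, TLSP value). */
typedef void (*model_tls_delete_cb_t)(int, void *);

typedef struct {
    void *tlsp[MODEL_NUM_TLSP];
    model_tls_delete_cb_t del_cb[MODEL_NUM_TLSP];
} tls_tcb_t;

/* Models vTaskSetThreadLocalStoragePointerAndDelCallback(). */
static void model_set_tlsp_and_del_cb(tls_tcb_t *tcb, int index, void *value,
                                      model_tls_delete_cb_t cb)
{
    assert(index >= 0 && index < MODEL_NUM_TLSP);
    tcb->tlsp[index] = value;
    tcb->del_cb[index] = cb;
}

/* Models vTaskSetThreadLocalStoragePointer(): the callback becomes NULL. */
static void model_set_tlsp(tls_tcb_t *tcb, int index, void *value)
{
    model_set_tlsp_and_del_cb(tcb, index, value, NULL);
}

/* At deletion, each non-NULL callback receives its index and pointer;
 * entries without a callback must have been freed by the user. */
static void model_run_delete_callbacks(tls_tcb_t *tcb)
{
    for (int i = 0; i < MODEL_NUM_TLSP; i++) {
        if (tcb->del_cb[i] != NULL) {
            tcb->del_cb[i](i, tcb->tlsp[i]);
        }
    }
}

/* Hypothetical example callback: frees a heap buffer stored in a TLSP.
 * It does not block, in line with the guidance above. */
static int example_freed_count;
static void free_tls_buffer(int index, void *ptr)
{
    (void)index;
    free(ptr);
    example_freed_count++;
}
```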

CONFIG_FREERTOS_THREAD_LOCAL_STORAGE_POINTERS in menuconfig can be used to configure the number of TLSP and Deletion Callbacks a TCB will have.

For more details see FreeRTOS API reference.

Configuring ESP-IDF FreeRTOS¶

ESP-IDF FreeRTOS can be configured in the project configuration menu (idf.py menuconfig) under Component Config/FreeRTOS. The following section highlights some of the ESP-IDF FreeRTOS configuration options. For a full list of ESP-IDF FreeRTOS configurations, see FreeRTOS

As ESP32-S2 is a single-core SoC, the config item CONFIG_FREERTOS_UNICORE is always set. This means ESP-IDF runs only on the single CPU. Note that this is not equivalent to running vanilla FreeRTOS: the behavior of several ESP-IDF components is modified. For more details regarding the effects of running ESP-IDF FreeRTOS on a single core, search for occurrences of CONFIG_FREERTOS_UNICORE in the ESP-IDF components.

CONFIG_FREERTOS_THREAD_LOCAL_STORAGE_POINTERS will define the number of Thread Local Storage Pointers each task will have in ESP-IDF FreeRTOS.

CONFIG_FREERTOS_SUPPORT_STATIC_ALLOCATION will enable the backported functionality of xTaskCreateStaticPinnedToCore() in ESP-IDF FreeRTOS.

CONFIG_FREERTOS_ASSERT_ON_UNTESTED_FUNCTION will trigger a halt in particular functions in ESP-IDF FreeRTOS which have not been fully tested in an SMP context.

CONFIG_FREERTOS_TASK_FUNCTION_WRAPPER will enclose all task functions within a wrapper function. In the case that a task function mistakenly returns (i.e. does not call vTaskDelete()), the call flow will return to the wrapper function. The wrapper function will then log an error and abort the application, as illustrated below:

E (25) FreeRTOS: FreeRTOS task should not return. Aborting now!

abort() was called at PC 0x40085c53 on core 0