Introduction to LLM Solution

Note

This document is automatically translated using AI. Please excuse any detailed errors. The official English version is still in progress.

LLM Solution Overview

- Solution Features:

The rise of large models such as ChatGPT has driven a global AI boom: cloud platforms are becoming more intelligent, and AI technology continues to spread across industries. Espressif has built a solid technical foundation for this with its open, shared, ecosystem-based intelligent hardware platform.

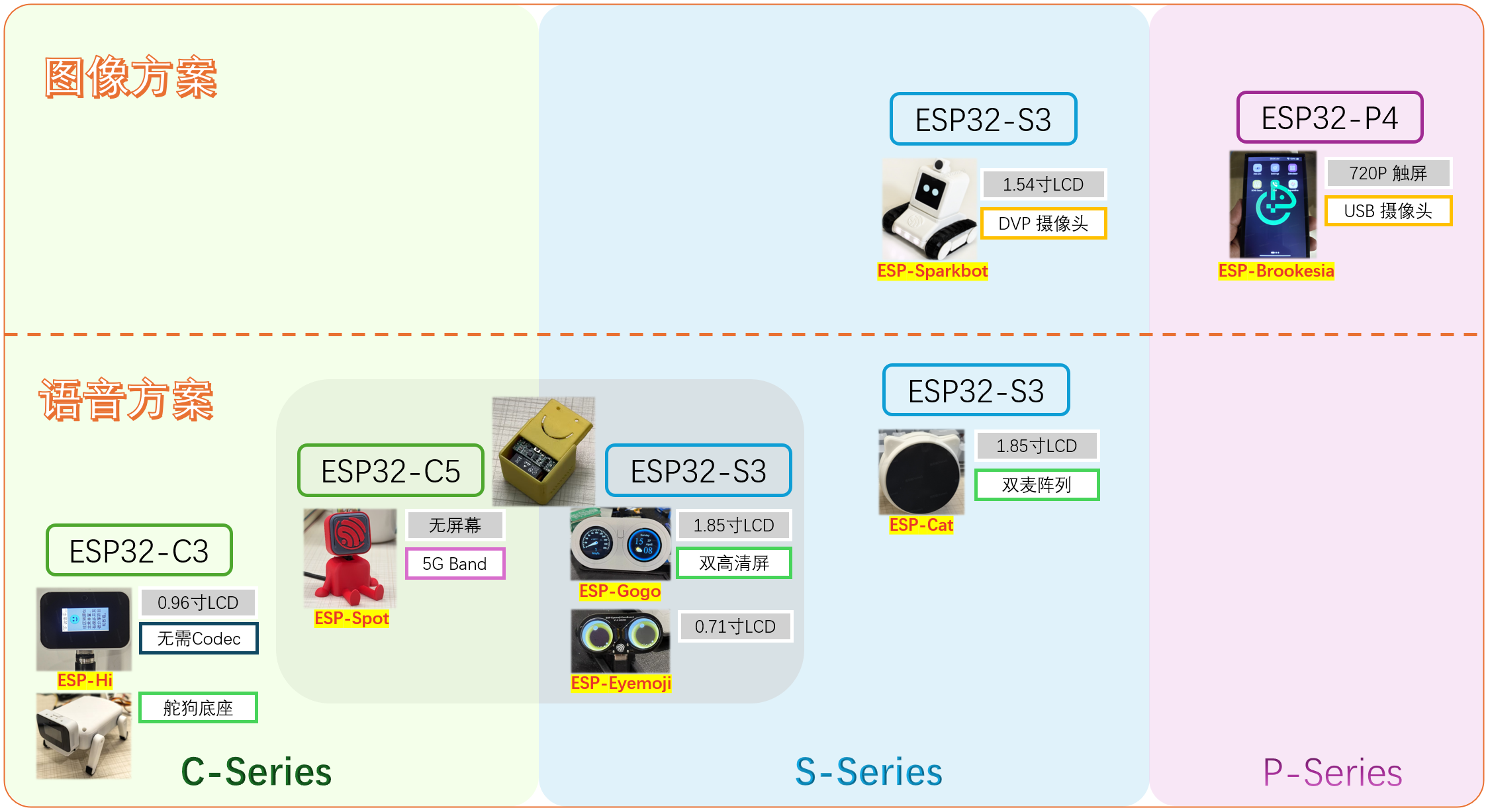

Espressif provides reference solutions for voice and vision large models, covering the low-cost ESP32-C3, the flagship ESP32-S3, the high-performance ESP32-P4, and the dual-band ESP32-C5.

Espressif is also working on private deployment of large language models to help customers bring products to market faster.

Solution Overview Diagram:

Voice Solution Details

| Positioning | C Series (ESP32-C3/C5) | S Series (ESP32-S3) |
|---|---|---|
| Entry Level | ESP-Hi: 0.96” 160×80 LCD, no codec required | |
| Cost-Effective | ESP-Spot: no screen, 5 GHz band | ESP-Gogo & ESP-Eyemoji |
| High Performance | | EchoEar: 1.85” 360×360 LCD, dual microphone array |

Vision Solution Details

| Positioning | S Series (ESP32-S3) | P Series (ESP32-P4) |
|---|---|---|
| Cost-Effective | ESP-SparkBot: DVP VGA/720p camera, 1.54” 240×240 LCD | ESP-Brookesia: USB camera, 720P touch screen |

Chip Feature Comparison

| Features | ESP32-S3 | ESP32-C3 | ESP32-C5 |
|---|---|---|---|
| Recommended Scenarios | Multi-modal interaction; voice and vision terminals with a display | Cost-effective, lightweight edge applications | Scenarios that benefit from 5 GHz Wi-Fi: interference resistance, network compatibility |
| Voice | Multiple microphones, supports acoustic echo cancellation (AEC) | Single microphone, basic audio capture | Single microphone, basic audio capture |
| Display | Multiple display solutions and complex interface interaction | Limited IO resources, restricted display solutions | Slightly more IO resources than C3, restricted display solutions |
| Camera | Supports SPI / DVP / USB cameras | Supports SPI cameras only | Supports SPI cameras only |
| Touch | Built-in touch sensor | No built-in touch, requires an external touch chip | No built-in touch, requires an external touch chip |
| Memory | Up to 512KB SRAM + PSRAM support | 400KB SRAM | Up to 384KB SRAM + PSRAM support |
| Local AI Capability | Vector computing instructions + neural network accelerator | Supports lightweight models | Supports lightweight models |

| Category | Solution Details |
|---|---|
| Cloud Platform Integration | RainMaker & Matter: provides rapid cloud platform access |
| Cloud Server | MCP, multi-platform access, and offline deployment: supports multiple cloud services and private deployment solutions |
| Software Framework | ESP-Brookesia: provides complete software development framework support |
| Application Solutions | Audio & image solutions: multiple chip options for specific scenarios. AI empowerment: ESP-PDD (small MIC/SPK/LCD module for rapid upgrade and evaluation of existing products); AT commands (simple and quick integration) |

- Process Architecture Introduction:

On the edge side, ESP chips mainly handle data collection, preliminary processing, and transmission. Because of their limited processing performance, LLM-related processing still relies on cloud servers. The overall system architecture is as follows:

Edge Tasks:

- Data collection and preliminary processing: real-time collection and preliminary processing of voice and image data on Espressif chips;

- Local AI model inference: lightweight models deployed for offline or low-latency processing;

- Real-time transmission: a full-duplex RTC protocol ensures timely data transmission to the cloud.

Cloud Tasks:

- Large model training and deep analysis: complex computation on the collected data to provide intelligent decision support;

- Algorithm updates and optimization: cloud computing resources are used for model iteration, with real-time feedback to the edge;

- Remote updates and maintenance: continuous system updates and maintenance through OTA and other methods.
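As a rough illustration of the edge-side "collect, pre-process, transmit" step, the sketch below cuts a captured PCM buffer into fixed-size frames before they are handed to a transport. The 16 kHz / 16-bit mono format and the 20 ms frame length are assumptions chosen for the example, not values specified by this document, and the function names are hypothetical.

```python
# Minimal sketch: cut raw PCM audio into fixed-size frames for streaming.
# Assumed (not from this document): 16 kHz, 16-bit mono PCM, 20 ms frames.
SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2
FRAME_MS = 20


def frame_size_bytes(sample_rate_hz=SAMPLE_RATE_HZ,
                     bytes_per_sample=BYTES_PER_SAMPLE,
                     frame_ms=FRAME_MS):
    """Number of bytes in one audio frame of frame_ms milliseconds."""
    samples_per_frame = sample_rate_hz * frame_ms // 1000
    return samples_per_frame * bytes_per_sample


def split_into_frames(pcm, frame_bytes):
    """Return (list of complete frames, leftover bytes).

    The leftover bytes should be prepended to the next captured buffer
    so that no samples are dropped between calls.
    """
    full = len(pcm) - len(pcm) % frame_bytes
    frames = [pcm[i:i + frame_bytes] for i in range(0, full, frame_bytes)]
    return frames, pcm[full:]
```

Under these assumptions one frame is 640 bytes and the uplink carries 32,000 bytes of uncompressed audio per second, which is why an audio encoder is usually placed before the transport in practice.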

- Private Deployment Value:

Accelerates testing and improves stability.

Combined with embedded devices, delivers a low-latency, high-privacy smart home experience.

Downstream enterprises can quickly integrate intelligent interaction capabilities by purchasing a complete deployment solution, which lowers technical barriers and shortens product implementation cycles.

Private Deployment Architecture:

Common LLM Application Scenarios

| Application Scenario | Product Form | Recommended Solution |
|---|---|---|
| Plush/Desktop Pet Toys | With screen: supports expression/interaction display; Without screen: pure voice and action interaction | ESP-Eyemoji: cute expressions, voice interaction; ESP-Spot: lightweight, no screen, supports gestures |
| Smart Speaker | Single mic: near-field interaction; Dual mic: sound source localization | ESP-Hi: ultra-low-cost single mic solution; EchoEar: dual microphone array, far-field wake-up |
| Automotive Applications | Single screen: driving information, cute eye expression display; Dual screen: driving information, dual eye expression display | EchoEar: single-screen voice interaction; ESP-Gogo & ESP-Eyemoji: dual-screen voice interaction |
| AI Empowerment for Existing Products | Small SPK/MIC/LCD module | ESP-PDD: rapid AI product prototyping and evaluation; AT commands: simple and quick integration |

ESP-Spot

ESP-Spot: ESP-Spot is an AI voice and motion interaction core module based on ESP32-S3 / ESP32-C5, focusing on voice interaction, AI perception, and intelligent control. In addition to offline voice wake-up and AI dialogue, it can sense touch on a doll through the ESP32-S3’s built-in touch/proximity sensing peripherals. A built-in accelerometer recognizes postures and actions, enabling richer interactions.

Related Links:

Related Videos: https://www.bilibili.com/video/BV1ekRAYVEZ1/?share_source=copy_web&vd_source=819d03f30389b111c508986ee27e0332

Features:

- Screenless solution focused on voice and action interaction

- Low cost, with no display screen

- The panel can be expanded to the dual-screen ESP-Gogo and ESP-Eyemoji

- Available for both ESP32-S3 and ESP32-C5; the C5 version is the one to promote where 5 GHz Wi-Fi is needed

ESP-SparkBot

ESP-SparkBot: ESP-SparkBot is based on ESP32-S3 and integrates voice interaction, image recognition, and multimedia entertainment. It can transform into a remote control car, run local AI features, and supports large model dialogue, real-time video transmission, and HD video projection. Powerful performance, endless fun!

Related Links:

ESP-Hi

ESP-Hi: ESP-Hi is a highly integrated AI voice solution based on ESP32-C3. It uses the ESP32-C3’s built-in ADC for microphone capture and I2S PDM directly for audio output, which keeps the board-level material cost low.

Description:

- High Integration: Uses the ESP32-C3’s built-in ADC for microphone capture and I2S PDM directly for audio output, eliminating the need for an external CODEC chip and keeping the board-level material cost low.

- Low Resource Usage: The audio input and output paths use only 4 IO pins and very little CPU and memory, leaving sufficient resources for application development.

- Multiple Interaction Methods: Includes a screen and LED indicators, and supports buttons, shaking, and voice wake-up.

Related Links:

Code Repository: Updating

Related Videos: https://www.bilibili.com/video/BV1BHJtz6E2S/

Features:

- Currently the AI voice solution with the lowest board-level material cost

- Lightweight wake word model for ESP32-C3, supporting offline wake-up

ESP-P4 Phone

ESP-P4 Phone: A handheld device solution with a screen, based on ESP32-P4. Combined with ESP-Brookesia’s Phone UI functionality, it delivers an Android-like system experience.

Hardware:

- Main Control: ESP32-P4

- Wi-Fi: ESP32-C6

- LCD & Touch: 720P MIPI-DSI ILI9881 & GT911

- Audio: ES8311

- Type-C: USB2.0

Related Links:

Code Repository: https://gitlab.espressif.cn:6688/ae_group/tools/esp-dev-tools/-/tree/feat/embedded_world_demo?ref_type=heads

Related Videos: To be added

Features:

- 720P high-resolution touch screen

- “ESP32-C6 + ESP-Hosted” Wi-Fi solution

- Android-like system experience, with apps for common functions such as network configuration

EchoEar

EchoEar: EchoEar (Meow Companion) is a smart AI development kit created by Espressif in collaboration with the Volcano Engine Coze large model team. It suits voice interaction products that need large model capabilities, such as toys, smart speakers, and smart control centers. The device is equipped with the ESP32-S3-WROOM-1 module, a 1.85-inch QSPI round touch screen, and a dual microphone array, and supports offline voice wake-up and sound source localization algorithms. Combined with the large model capabilities provided by Volcano Engine, it can achieve full-duplex voice interaction, multimodal recognition, and AI agent control, giving developers a solid foundation for building complete on-device AI applications.

Hardware:

ESP32-S3

Related Links:

LLM Hardware Solution Summary

Audio Input Solution Comparison Table

| Solution No. | Solution Type | Resource Usage | Cost | Effects and Recommended Application Scenarios |
|---|---|---|---|---|
| 1 | Digital Microphone (MSM261S4030H0R etc.) | 1 I2S (3 pins) | High | |
| 2 | Dedicated Audio ADC + Analog Microphone (ES7210) | 1 I2S + 1 I2C (5 pins) | Medium-High | |
| 3 | CODEC + Analog Microphone (ES8311 etc.) | 1 I2S + 1 I2C (5 pins) | Medium | |
| 4 | Internal ADC + Op-amp + Analog Microphone | 1 Internal ADC (1 pin) | Lowest | |

Audio Output Solution Comparison Table

| Solution No. | Solution Type | Resource Usage | Cost | Effects and Recommended Application Scenarios |
|---|---|---|---|---|
| 1 | I2S Digital Amplifier (MAX98357A etc.) | 1 I2S + 1 PA control (4 pins) | Medium | |
| 2 | CODEC + Analog Amplifier (ES8311 + NS4150) | 1 I2S + 1 I2C + 1 PA control (6 pins) | Low | |
| 3 | I2S PDM + Analog Amplifier (NS4150) | 2 I2S pins + 1 PA control (3 pins) | Lowest | |

The audio solutions above can be freely combined. For customers still in the design phase, however, only the following combinations are recommended, based on cost and performance:

Voice Solution Recommendation Comparison Table

| Category | Type Description | Solution Features | Recommended Hardware | Reference Development Board |
|---|---|---|---|---|
| Optimal Cost | Analog microphone + op-amp + internal ADC audio capture + I2S PDM output | | ESP32-C3 / ESP32-C5 | ESP-Hi |
| Balanced Choice | Single microphone + external codec chip (e.g., ES8311) | | ESP32-S3 / ESP32-P4 / ESP32-C5 | |
| Best Performance | Multiple microphones + external codec + external audio ADC chip (e.g., ES8311 + ES7210) | | ESP32-S3 / ESP32-P4 | |
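The pin counts in the audio input and output comparison tables can be combined to check a GPIO budget. The sketch below is only an illustration: the dictionary keys are hypothetical labels for the table rows, and the totals are a naive sum of the per-table pin counts. A design that uses one codec for both capture and playback shares its I2S and I2C lines, so its real pin count is lower than the sum shown here.

```python
# Pin counts copied from the audio input/output comparison tables.
# The key names are hypothetical labels for the table rows.
AUDIO_INPUTS = {
    "digital_mic": 3,
    "audio_adc_analog_mic": 5,
    "codec_analog_mic": 5,
    "internal_adc_analog_mic": 1,
}
AUDIO_OUTPUTS = {
    "i2s_digital_amp": 4,
    "codec_analog_amp": 6,
    "i2s_pdm_analog_amp": 3,
}


def combos_within_budget(max_pins):
    """List (total_pins, input, output) combinations that fit max_pins.

    Totals are a naive sum and therefore an upper bound: shared buses
    (for example a single codec used for both directions) are not modelled.
    """
    result = []
    for in_name, in_pins in AUDIO_INPUTS.items():
        for out_name, out_pins in AUDIO_OUTPUTS.items():
            total = in_pins + out_pins
            if total <= max_pins:
                result.append((total, in_name, out_name))
    return sorted(result)
```

With a budget of 4 pins, the only combination returned is the internal ADC input with I2S PDM output, which matches the 4 IO figure quoted for ESP-Hi.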

AI Vision Hardware Solution Comparison Table

| Interface Type | Camera Performance | Supported Chips | Reference Development Board | Features |
|---|---|---|---|---|
| SPI | Low resolution (max 320×240) | ESP32-S3 / ESP32-C5 / ESP32-C3 | ESP32-S3-EYE | |
| DVP (Parallel) | Low to medium resolution (e.g., VGA, 720p) | ESP32-S3 / ESP32-P4 | ESP32-S3-EYE | |
| USB | Low to high resolution (depends on the USB camera) | ESP32-S3 / ESP32-P4 | ESP32-S3-USB-OTG | |
| MIPI CSI | High resolution (e.g., 1080p, 4K) | ESP32-P4 | ESP32-P4-Function-EV-Board | |

In summary: ESP32-C3 / ESP32-C5 support only SPI cameras and are currently adapted to the BD3901 camera. For ESP32-S3 designs with image clarity requirements, a DVP camera is the first recommendation; for ESP32-P4, a MIPI camera is the first recommendation.
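The selection rule in this summary can be captured in a small lookup table, which is convenient for a configuration script or a bring-up checklist. This is only a sketch: the function names are hypothetical, and the interface lists follow the summary and the chip feature comparison (ESP32-S3 listed with SPI / DVP / USB, MIPI CSI on ESP32-P4).

```python
# Camera interfaces per chip, as described in this document.
CAMERA_INTERFACES = {
    "ESP32-C3": ["SPI"],
    "ESP32-C5": ["SPI"],
    "ESP32-S3": ["SPI", "DVP", "USB"],
    "ESP32-P4": ["DVP", "USB", "MIPI CSI"],
}

# First-choice interface per chip, following the summary above.
PREFERRED_INTERFACE = {
    "ESP32-C3": "SPI",
    "ESP32-C5": "SPI",
    "ESP32-S3": "DVP",
    "ESP32-P4": "MIPI CSI",
}


def recommend_camera(chip):
    """Return the first-choice camera interface for a chip."""
    if chip not in PREFERRED_INTERFACE:
        raise ValueError(f"unknown chip: {chip}")
    return PREFERRED_INTERFACE[chip]


def chips_supporting(interface):
    """Return the chips that list the given camera interface."""
    return [chip for chip, ifaces in CAMERA_INTERFACES.items()
            if interface in ifaces]
```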

AI Perception Solution

Tactile Perception

Adding tactile interaction to AI products can significantly enhance user experience. In categories such as plush toys and desktop companions, it is recommended to use capacitive touch sensors to achieve “touch perception”.

Experience Advantages: The sensor can be hidden inside the structure for a “zero presence” feel, and it triggers without forceful pressing, which makes the interaction more natural. This suits children and other scenarios where only a light touch is practical.

Chip Support: The ESP32-S3 / ESP32-P4 come with built-in capacitive touch sensing peripherals, which can drive touch electrodes directly without a dedicated touch IC. The ESP32-C3 / ESP32-C5 do not have built-in touch. If tactile interaction is required, an external capacitive touch chip or a button solution can be used.

Reference Design: You can refer to the hardware and software implementation of ESP-Spot. The code repository provides examples of touch detection and AI linkage.
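To illustrate how raw capacitive readings are typically turned into a stable "touched" state, the sketch below tracks a slowly moving baseline and reports a touch only after several consecutive readings exceed it by a threshold. It is a generic illustration rather than the ESP-Spot implementation: the class name, threshold, debounce count, and smoothing factor are hypothetical, and it assumes the raw reading rises when the electrode is touched.

```python
class TouchDetector:
    """Baseline tracking + threshold + debounce for one touch channel.

    Assumption: raw readings increase when the electrode is touched.
    All default values are illustrative and need tuning on real hardware.
    """

    def __init__(self, threshold=500, debounce=3, alpha=0.05):
        self.threshold = threshold  # minimum rise above baseline
        self.debounce = debounce    # consecutive samples required
        self.alpha = alpha          # baseline smoothing factor
        self.baseline = None
        self.touched = False
        self._count = 0

    def update(self, raw):
        """Feed one raw reading; return True while a touch is detected."""
        if self.baseline is None:
            # First sample initialises the baseline.
            self.baseline = float(raw)
            return False
        if raw - self.baseline > self.threshold:
            self._count += 1
        else:
            self._count = 0
            # Follow slow drift (temperature, humidity) only while idle.
            self.baseline += self.alpha * (raw - self.baseline)
        self.touched = self._count >= self.debounce
        return self.touched
```

Freezing the baseline while the reading is above the threshold keeps a long press from being absorbed into the baseline.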

Posture Sensing

An IMU (Inertial Measurement Unit) gives the device “posture awareness”, which is used for wake-up from sleep and for enhancing interaction.

Typical Applications: Detect actions such as picking up, putting down, tilting, and shaking to implement “wake up on pick up”. Combined with algorithms, the device can also recognize gestures such as nodding, shaking the head, and flipping, which enriches interaction on screenless or small-screen products.

Selection Suggestions: A common 6-axis IMU (such as the LSM6DS series) meets most scenarios; if a magnetometer is needed for absolute orientation, choose a 9-axis IMU.

Reference Design: Both ESP-Spot and ESP-SparkBot integrate an IMU and can be used as references for wake-up from sleep and simple posture interaction.
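A simple way to approximate "wake up on pick up" and shake detection is to look at how far the acceleration magnitude deviates from 1 g, the value seen when the device is at rest. The sketch below is a minimal illustration, not the algorithm used in ESP-Spot or ESP-SparkBot; the function names and both thresholds are hypothetical and would need tuning against real sensor data.

```python
import math


def magnitude_g(ax, ay, az):
    """Length of the acceleration vector, in g."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def classify_motion(samples, pickup_dev_g=0.15, shake_dev_g=0.8):
    """Classify a window of (ax, ay, az) samples given in g.

    Returns "still", "pickup", or "shake" based on the largest
    deviation of the magnitude from 1 g (gravity only, at rest).
    Thresholds are illustrative assumptions.
    """
    peak = max((abs(magnitude_g(*s) - 1.0) for s in samples), default=0.0)
    if peak >= shake_dev_g:
        return "shake"
    if peak >= pickup_dev_g:
        return "pickup"
    return "still"
```

Recognizing gestures such as nodding or flipping needs orientation information as well, so a magnitude-only check like this is best treated as a wake-up trigger.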

Screen and UI

The number of screens and resolution should be chosen based on the product form and UI complexity. You can refer to the driver solutions and interface descriptions in LCD Application Solution.

Resolution and Performance: The higher the resolution and the refresh rate, the greater the CPU, bus, and memory usage. In applications with wake words, voice recognition, or local AI, sufficient CPU margin must be reserved for audio and AI tasks so that screen refreshes do not cause lag or delayed wake-up.

Typical Configuration: Entry-level products commonly use 0.96”~1.54”, 160×160 or 240×240; products with emoticons or complex UI can choose 1.85”, 360×360 or higher resolution. For specifics, please refer to the resource usage description in the document and adjust according to actual measurements.

Note

The resource usage given in the LCD Application Solution is based on a “screen-only” scenario. If the actual product runs wake-up words, voice front-end, TTS or visual algorithms at the same time, sufficient CPU and memory margin should be reserved.
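The memory side of this trade-off can be estimated with simple arithmetic: a full frame buffer needs width × height × bytes per pixel. The sketch below assumes a 16-bit RGB565 pixel format, which is an assumption for illustration and not a requirement stated in this document; the function name is hypothetical.

```python
def framebuffer_bytes(width, height, bits_per_pixel=16, buffers=1):
    """Bytes needed for `buffers` full-screen frame buffers.

    bits_per_pixel=16 corresponds to RGB565 (an assumption here).
    """
    return width * height * bits_per_pixel // 8 * buffers


# Panel sizes mentioned in this document.
PANELS = {
    "160x80": (160, 80),
    "240x240": (240, 240),
    "360x360": (360, 360),
}

for name, (w, h) in PANELS.items():
    print(name, framebuffer_bytes(w, h), "bytes per buffer")
```

Under this assumption a 240×240 buffer takes 115,200 bytes and a 360×360 buffer takes 259,200 bytes, so two full 360×360 buffers (518,400 bytes) would not fit in 400KB of SRAM. Designs without PSRAM therefore commonly render through smaller partial buffers.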

Get the AI product moving

If you need to give your AI product the ability to perform actions (such as turning its head, waving its hand, moving its mechanical arm, etc.), you can choose the type of motor based on the requirements of force, precision, and cost.

Servo: Simple angle control, unified interface, suitable for head rotation and simple limb movements. See the reference design at ESP-Hi.

DC Motor: Suitable for continuous rotation scenarios such as wheeled movement and fans. It must be used with a driver, and optionally with an encoder for speed/position control. See the reference design at ESP-SparkBot.

Stepper Motor: With a small step angle and high positioning accuracy, it is suitable for applications that require precise angles (such as pan-tilt units, pointing mechanisms). See the reference design at EchoEar.

Robotic Arm: A robotic arm solution combining servo motors and stepper motors. Refer to ESP32P4-Robotic-Arm.

The aforementioned reference designs all include hardware connections and software driver examples, which can be trimmed and expanded after selecting the product form.
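For the servo option, angle control comes down to mapping an angle to a PWM pulse width. The sketch below assumes a typical hobby servo driven at 50 Hz with a 500 µs to 2500 µs pulse range over 180 degrees and a 13-bit PWM timer; these figures and the function names are assumptions for illustration and should be checked against the datasheet of the servo actually used.

```python
def servo_pulse_us(angle_deg, min_us=500, max_us=2500, max_angle=180):
    """Map an angle to a pulse width in microseconds.

    Angles outside [0, max_angle] are clamped. The pulse range is an
    assumed typical value, not taken from a specific servo datasheet.
    """
    angle = max(0.0, min(float(angle_deg), float(max_angle)))
    return min_us + (max_us - min_us) * angle / max_angle


def pwm_duty(pulse_us, freq_hz=50, resolution_bits=13):
    """Convert a pulse width to a duty value for a generic PWM timer,
    where 2**resolution_bits represents a 100% duty cycle."""
    period_us = 1_000_000 / freq_hz
    return round(pulse_us / period_us * (2 ** resolution_bits))
```

Under these assumptions the 90 degree center position corresponds to a 1500 µs pulse, which is a duty value of 614 on a 13-bit timer.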

Introduction to Wake Word

ESP32-C3 / ESP32-C5 and ESP32-S3 / ESP32-P4 all currently support local wake words. The series differ in model scale and front-end algorithms, so the choice is a trade-off between performance and cost.

ESP32-C3 / ESP32-C5: These chips carry a smaller wake word model, and because of computational limits the audio front-end (AFE, covering noise reduction, VAD, and so on) has been trimmed. Overall, the wake-up rate and noise resistance are weaker than on S3/P4. They suit cost-sensitive applications that do not require high wake-up sensitivity.

ESP32-S3 / ESP32-P4: These chips use a larger wake word model and retain a more complete AFE pipeline, resulting in better wake-up performance. The wake word model can also be accelerated by the extended AI instruction set, which lowers CPU usage and makes it easier to run in parallel with tasks such as voice recognition and TTS.

For reference designs, see ESP-Hi and EchoEar, which cover entry-level and high-performance voice interaction scenarios respectively.

Edge AI Information Sharing

Voice Capability Enhancement

- Wake word support related repository references:

TTS supports low-cost custom wake words (expected to be released by end of May)

Vision Capability Enhancement

Supports local YOLO model operation

Can implement basic object detection functionality

Large Model Integration Examples